Historically, robotic arms have been applied mainly to large-scale processes and production lines, while many smaller-scale activities remain largely manual. The resulting robotic cells are generally fully autonomous: the process is entirely deterministic and locked, and no interaction is possible between the operator and the robotic system during execution. As a result, interactive processes are difficult to handle, and adapting to process changes, even small ones, is very costly and time-consuming. Furthermore, any program change requires the expertise of a roboticist, often subcontracted, which makes adaptation even harder.

To address “low volume / high mix” processes, a new approach is needed, one that cuts down programming time and makes programming accessible to non-experts. The two main hurdles are generally the difficulty of programming new behaviors and of acquiring the numerous points and trajectories needed for a given process.

Teaching robots points of interest and complex gestures is cumbersome and time-consuming. How can we make it easier and faster?

In the MERGING project, CEA worked on this very topic and developed a framework named SPIRE (Skill-based Programming Interface for Robotic Environments), which revolves around two core concepts: teaching by demonstration and skill-based programming. This article gives an overview of this work, with a focus on the teaching part.

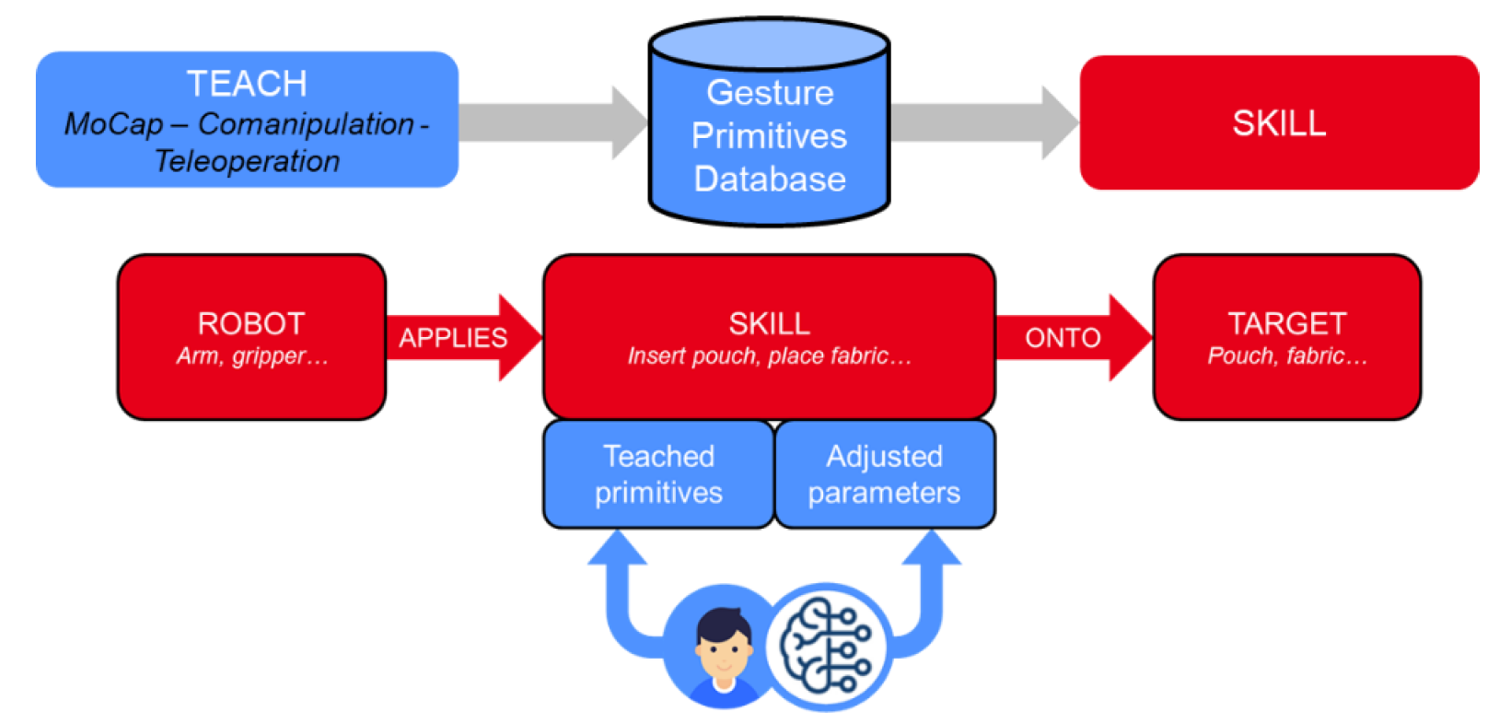

A skill is a high-level, task-related robotic function that presents robot actions in a way that is meaningful to the operator, such as “insert pouch”, “place fabric”, “unwrap fabric”, and so on. The SPIRE skills framework is designed to provide a convenient and efficient way to program those skills, independently of the brand-specific API provided by the robot.

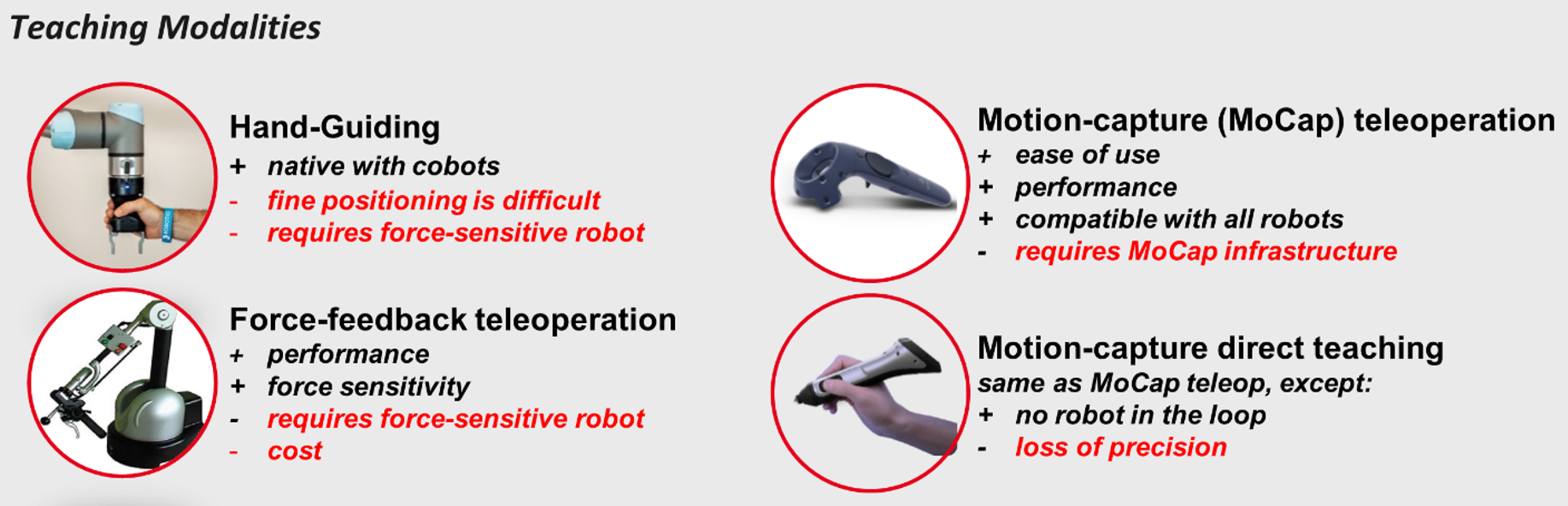
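
To make the idea of a brand-independent skill more concrete, here is a minimal Python sketch. It is not the SPIRE API: `RobotBackend`, `RecordingBackend`, `PlaceFabric`, and the coordinates are all hypothetical names and values chosen for illustration. The point is only that a skill can be written against a small neutral interface, with one adapter per vendor API behind it.

```python
from dataclasses import dataclass, field
from typing import List, Protocol, Tuple

# Simplified pose: position only (x, y, z) in metres. A real system would
# also carry orientation; this is an assumption made to keep the sketch short.
Pose = Tuple[float, float, float]


class RobotBackend(Protocol):
    """Hypothetical brand-neutral interface; each vendor API would get its own adapter."""

    def move_to(self, pose: Pose) -> None: ...

    def set_gripper(self, closed: bool) -> None: ...


@dataclass
class RecordingBackend:
    """Stand-in backend that only records commands, so the sketch runs without a robot."""

    log: List[str] = field(default_factory=list)

    def move_to(self, pose: Pose) -> None:
        self.log.append(f"move_to{pose}")

    def set_gripper(self, closed: bool) -> None:
        self.log.append("grip" if closed else "release")


@dataclass
class PlaceFabric:
    """Illustrative skill: grip the fabric at one taught point, release it at another."""

    pick_point: Pose
    place_point: Pose

    def execute(self, robot: RobotBackend) -> None:
        robot.move_to(self.pick_point)
        robot.set_gripper(closed=True)
        robot.move_to(self.place_point)
        robot.set_gripper(closed=False)


# The skill is configured with two points (placeholder values) and run
# against the recording backend instead of a real robot.
backend = RecordingBackend()
skill = PlaceFabric(pick_point=(0.4, 0.0, 0.1), place_point=(0.4, 0.3, 0.1))
skill.execute(backend)
print(backend.log)
```

Swapping `RecordingBackend` for an adapter around a specific robot's API would leave `PlaceFabric` unchanged, which is the kind of independence the paragraph above describes.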

To be applied by the robot, a skill generally needs to be configured, mainly with geometric information such as points of application, gestures, or trajectories to follow. In state-of-the-art solutions, this teaching step is usually done using raw data from CAD, the robot teach pendant, or hand-guiding features.

In the MERGING project, we developed a new multimodal teaching module, which allows natural teaching using motion capture and remote control. This MoCap-based approach makes teaching the robot quick and easy. Motion capture can be used “offline”, without the robot in the loop, to teach directly in the scene. It can also be used for robot teleoperation, enabling remote interaction with any kind of robot, even industrial robots in a dedicated cell. Each teaching modality has its own advantages and limitations, summarized in the following illustration. The choice depends on the situation.
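
As a rough illustration of what "offline" teaching from motion capture might involve, the sketch below turns a densely sampled recorded gesture into a short list of waypoints. This is an assumption about one possible processing step, not a description of the MERGING teaching module: the function name `downsample_by_distance`, the millimetre units, and the spacing threshold are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # tracked position (x, y, z), here in millimetres


def downsample_by_distance(samples: Sequence[Point], min_step: float) -> List[Point]:
    """Keep a sample only when it lies at least `min_step` from the last kept one."""
    if not samples:
        return []
    kept = [samples[0]]
    for point in samples[1:]:
        if math.dist(point, kept[-1]) >= min_step:
            kept.append(point)
    # Always keep the final sample so the end point of the gesture is preserved.
    if kept[-1] != samples[-1]:
        kept.append(samples[-1])
    return kept


# Synthetic "recording": a straight 10 mm stroke sampled every millimetre.
recorded = [(float(i), 0.0, 0.0) for i in range(11)]
waypoints = downsample_by_distance(recorded, min_step=5.0)
print(waypoints)
```

Spacing by distance rather than by time is a deliberate choice in this sketch: a demonstrator who pauses mid-gesture would otherwise produce clusters of near-identical points.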

By coupling motion capture for teaching with skills for programming robot capabilities, the SPIRE MERGING framework will significantly enhance the user experience for collaborative robotics applications.

Baptiste Gradoussoff

Baptiste Gradoussoff is a research engineer and project manager at the Interactive Robotics Laboratory. He joined CEA LIST in 2011. Specializing in motion control and software for collaborative robotics and telemanipulation, he steers the laboratory's activities in agile robotics and intuitive programming, whose goal is to develop efficient solutions for new manufacturing challenges, such as small-batch process automation.